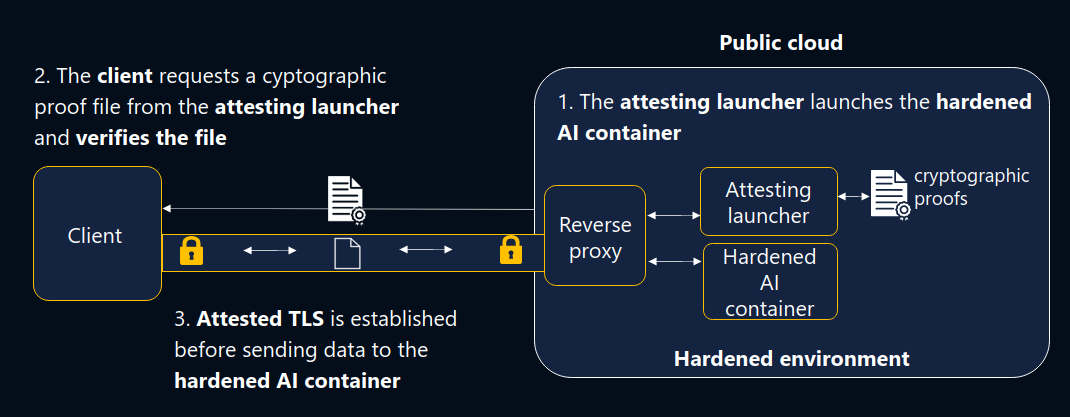

Architecture

BlindLlama is composed of two main parts:

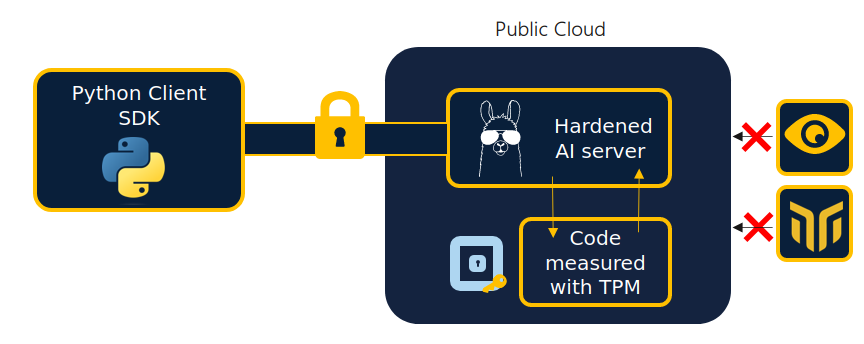

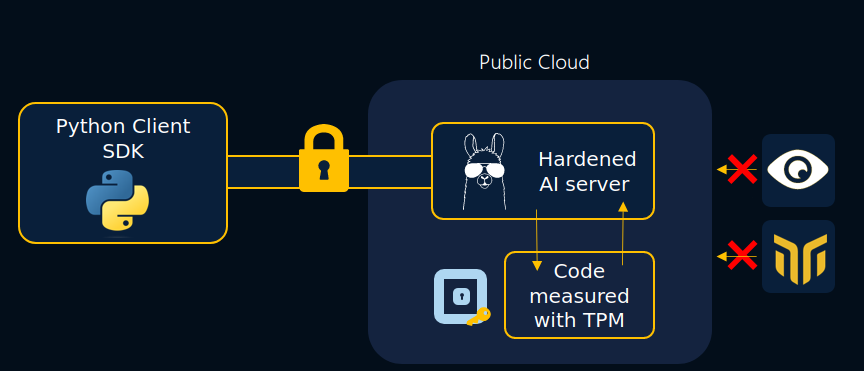

- An open-source client-side Python SDK that verifies that the remote AI models we serve guarantee that data sent to them is not exposed to us.

- An open-source server made up of three key components that work together to serve models without exposing data to the AI provider (Mithril Security). We remove potential server-side leakage channels, from network to logs, and use TPMs to provide cryptographic proof that those privacy controls are in place.

Client

The client performs two main tasks:

- Using attestation to verify that the server it communicates with is the expected hardened AI server.

- Securely sending data to be analyzed by a remote AI model, over attested TLS so that the data is not exposed.
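The two client tasks can be pictured as a single check made before any data leaves the machine. The sketch below is illustrative only and is not the BlindLlama SDK: `AttestationReport`, `cert_fingerprint`, and `server_is_trusted` are hypothetical names, and the report layout is an assumption. It shows the general idea behind attested TLS: the client accepts the connection only if the attested measurement matches the expected one and the TLS certificate presented on the connection is the one named in the attestation.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class AttestationReport:
    """Hypothetical report layout; the real proof format is not described here."""
    code_measurement: str      # hex digest covering the serving stack
    tls_cert_fingerprint: str  # hex SHA-256 of the server's TLS certificate


def cert_fingerprint(cert_der: bytes) -> str:
    """Return the hex SHA-256 fingerprint of a DER-encoded certificate."""
    return hashlib.sha256(cert_der).hexdigest()


def server_is_trusted(report: AttestationReport,
                      expected_measurement: str,
                      presented_cert_der: bytes) -> bool:
    """Accept the server only if both checks pass.

    1. The attested measurement equals the value the client expects.
    2. The certificate seen on the TLS connection is the attested one,
       which ties the encrypted channel to the attested server.
    """
    measurement_ok = hmac.compare_digest(report.code_measurement,
                                         expected_measurement)
    cert_ok = hmac.compare_digest(report.tls_cert_fingerprint,
                                  cert_fingerprint(presented_cert_der))
    return measurement_ok and cert_ok
```

In this sketch the client would send a prompt only after `server_is_trusted` returns `True`. Verifying that the report itself is genuine (for example, that it is signed by a real TPM) is a separate step that is omitted here.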

Server

The server is split into three components:

- The hardened AI container: This element serves our AI API in an isolated hardened environment.

- The attesting launcher: The launcher loads the hardened AI container and creates a proof file which is used to verify the API's code and model using TPM-based attestation.

- The reverse proxy: The reverse proxy handles communication between the client and the server-side components (the container and the launcher) using attested TLS.
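To give a feel for how a launcher can produce a measurement that covers both the API's code and the model, here is a minimal software-only sketch of a TPM-style "extend" operation, in which each new value is the hash of the previous register value concatenated with the digest of the measured data. It is an assumption-laden illustration and not BlindLlama's launcher: `extend` and `measure` are hypothetical helpers, SHA-256 is assumed as the hash, and a real deployment would rely on the TPM's own registers and a signed quote rather than Python code.

```python
import hashlib
from typing import Iterable


def extend(register: bytes, data: bytes) -> bytes:
    """TPM-style extend: new = SHA-256(old || SHA-256(data))."""
    return hashlib.sha256(register + hashlib.sha256(data).digest()).digest()


def measure(artifacts: Iterable[bytes]) -> str:
    """Fold a sequence of artifacts (e.g. code, then model) into one digest.

    The register starts at all zeros and is extended once per artifact,
    so the result depends on both the content and the order of the inputs.
    """
    register = bytes(32)  # 32 zero bytes, the assumed initial register value
    for artifact in artifacts:
        register = extend(register, artifact)
    return register.hex()
```

Because each step hashes the previous value, changing any artifact or reordering them yields a different final digest, which is what lets a client compare one reported value against one expected value.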